VOICE BOND

Personal Academic Work

May 2022 - Dec 2022

Keywords

Remote Interaction, Wearable Device, Sound Visualization

Technique

Sound localization, UWB, TouchDesigner, MR Passthrough

The goal of this project is to create an auditory virtual reality space in which hearing is the primary sense and visual effects serve as its expression. Auditory experience is a holistic perception of space relative to the listener. This project examines the relationship between the listening subject and the acoustic environment, treats listening as a process of exploring and constructing that environment, and considers auditory experience as a real-time construction of "reality".

How can sound visualization reproduce the ambient sound field to create a sense of presence in remote communication?

1. Background

When two spaces are completely separated, their sound fields cannot interact, and people in one space cannot perceive the other's spatial environment. Voice and video calls on everyday devices such as phones and computers meet basic communication needs, but they convey almost no sense of presence or spatial immersion.

2. Project Concept

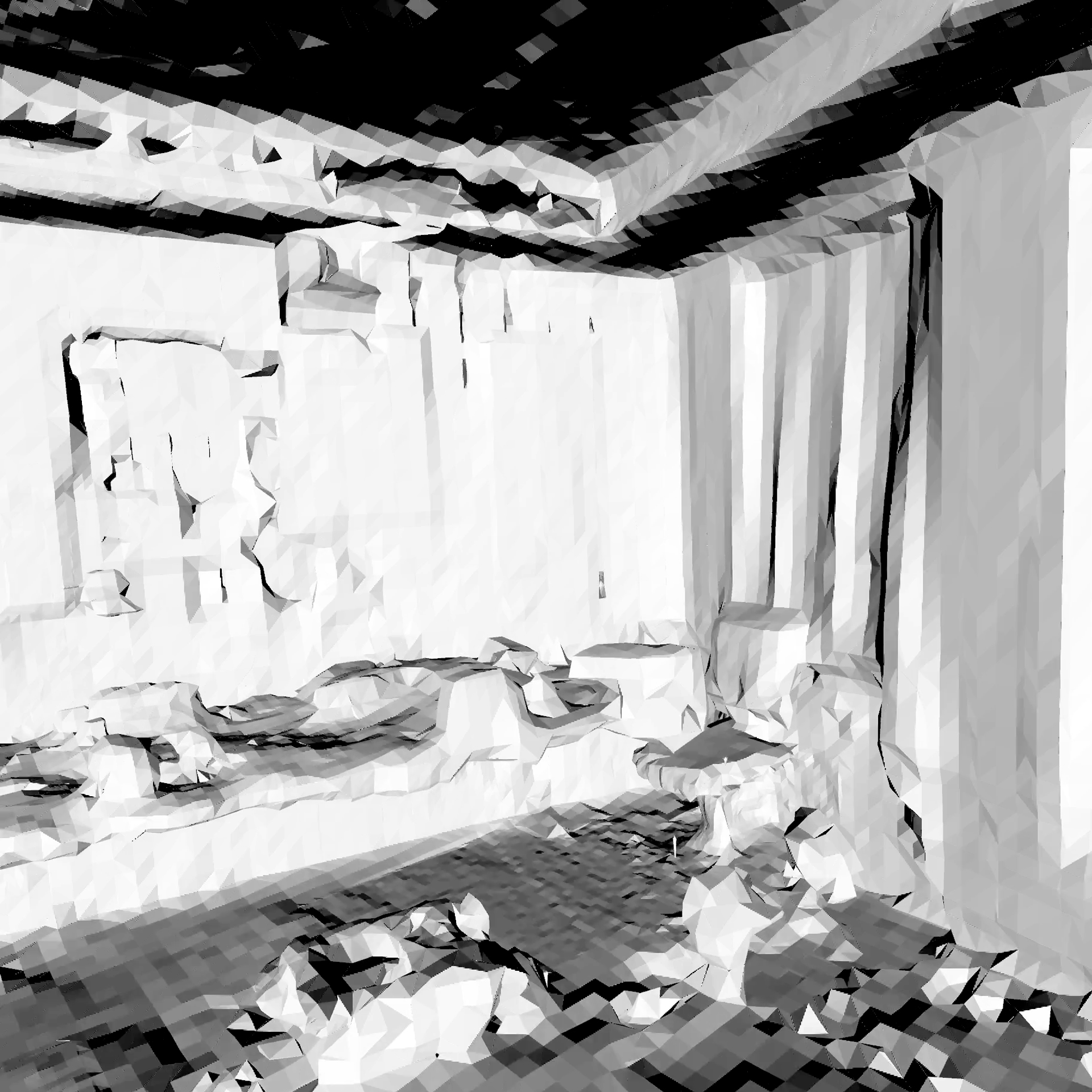

Reconstruct the spatial sound field in the same way that LiDAR scanning reconstructs a spatial model.

3D Scanner ---------------------> Sound Field Scanner

3. Project Logical Chain

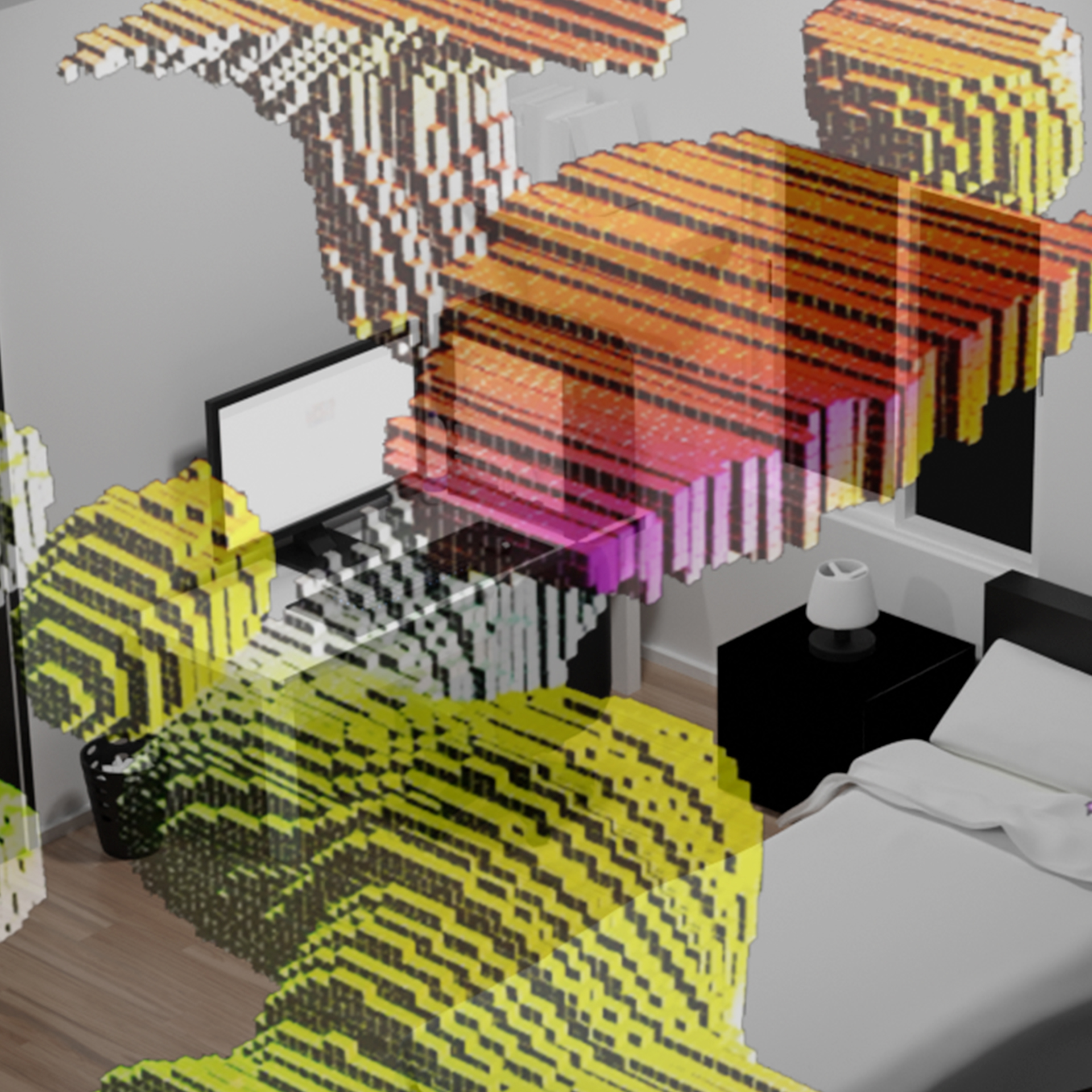

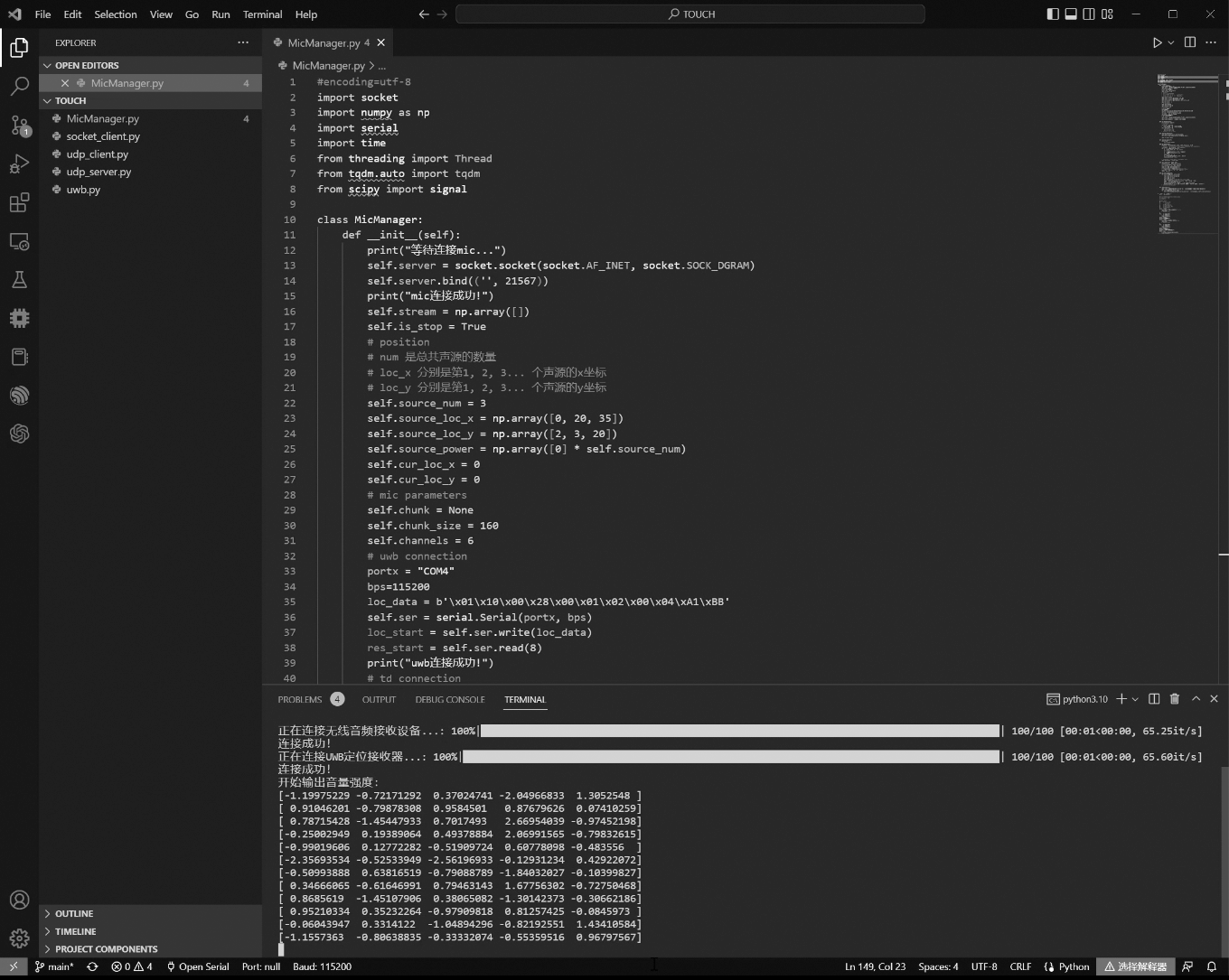

The system links two separate spaces. In the first space (space A), I collected sound data and the coordinates of a person's walking path. In the second space (space B), I used TouchDesigner to process the sound field data and walking path data from space A, visualize the sound field in 2D and 3D, and send the visuals to a VR headset (Oculus Quest 2). The person in space B can thus perceive the sound field of space A in real time, creating a cross-space connection.
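The cross-space link needs an agreed format for the data that travels from space A to space B. The project does not describe one. The sketch below assumes a hypothetical per-sample frame (`FieldFrame`, holding the wearer position, the dominant source direction, and a level) encoded as JSON and sent over UDP, which TouchDesigner can receive, for example with a UDP In DAT. The field names, the port number, and the choice of JSON over UDP are illustrative assumptions.

```python
import json
import socket
from dataclasses import asdict, dataclass


@dataclass
class FieldFrame:
    """One hypothetical sample streamed from space A to space B."""
    t: float            # capture time in seconds
    x: float            # wearer position from UWB, metres
    y: float
    azimuth_deg: float  # estimated direction of the dominant sound source
    level_db: float     # estimated sound level


def encode(frame: FieldFrame) -> bytes:
    # Compact JSON keeps each packet small and easy to parse on the receiving side.
    return json.dumps(asdict(frame), separators=(",", ":")).encode("utf-8")


def decode(payload: bytes) -> FieldFrame:
    return FieldFrame(**json.loads(payload.decode("utf-8")))


def send(frame: FieldFrame, host: str = "127.0.0.1", port: int = 7000) -> None:
    # Hypothetical host/port; one datagram per frame, no acknowledgement.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode(frame), (host, port))
```

UDP suits a real-time display because a lost frame is simply superseded by the next one, whereas a reliable stream such as TCP would hold newer positions back until the lost data is retransmitted.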

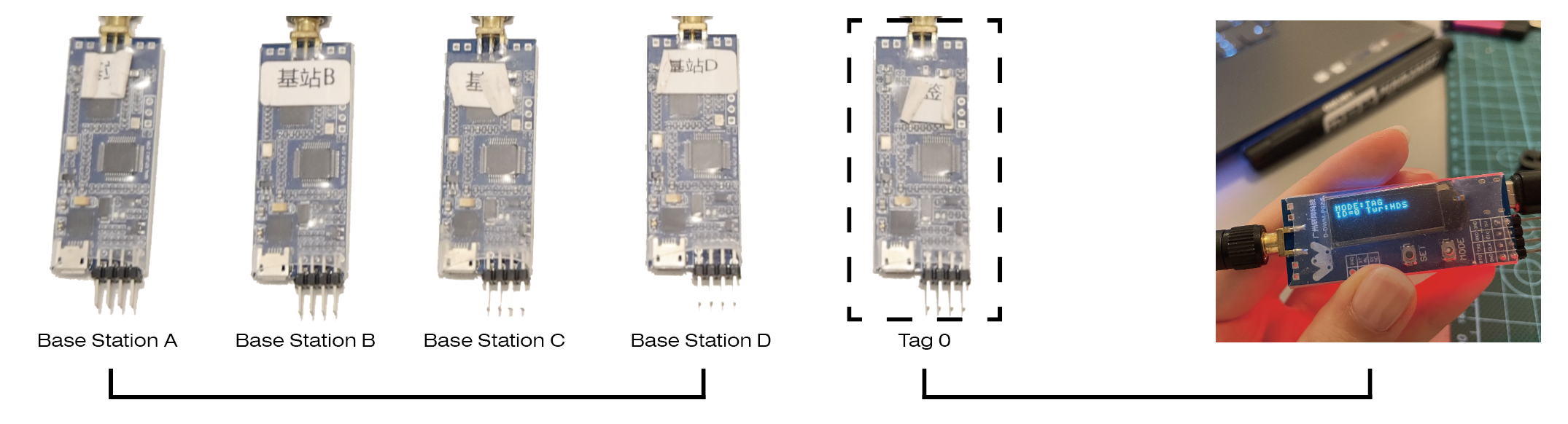

4. Human Path Positioning

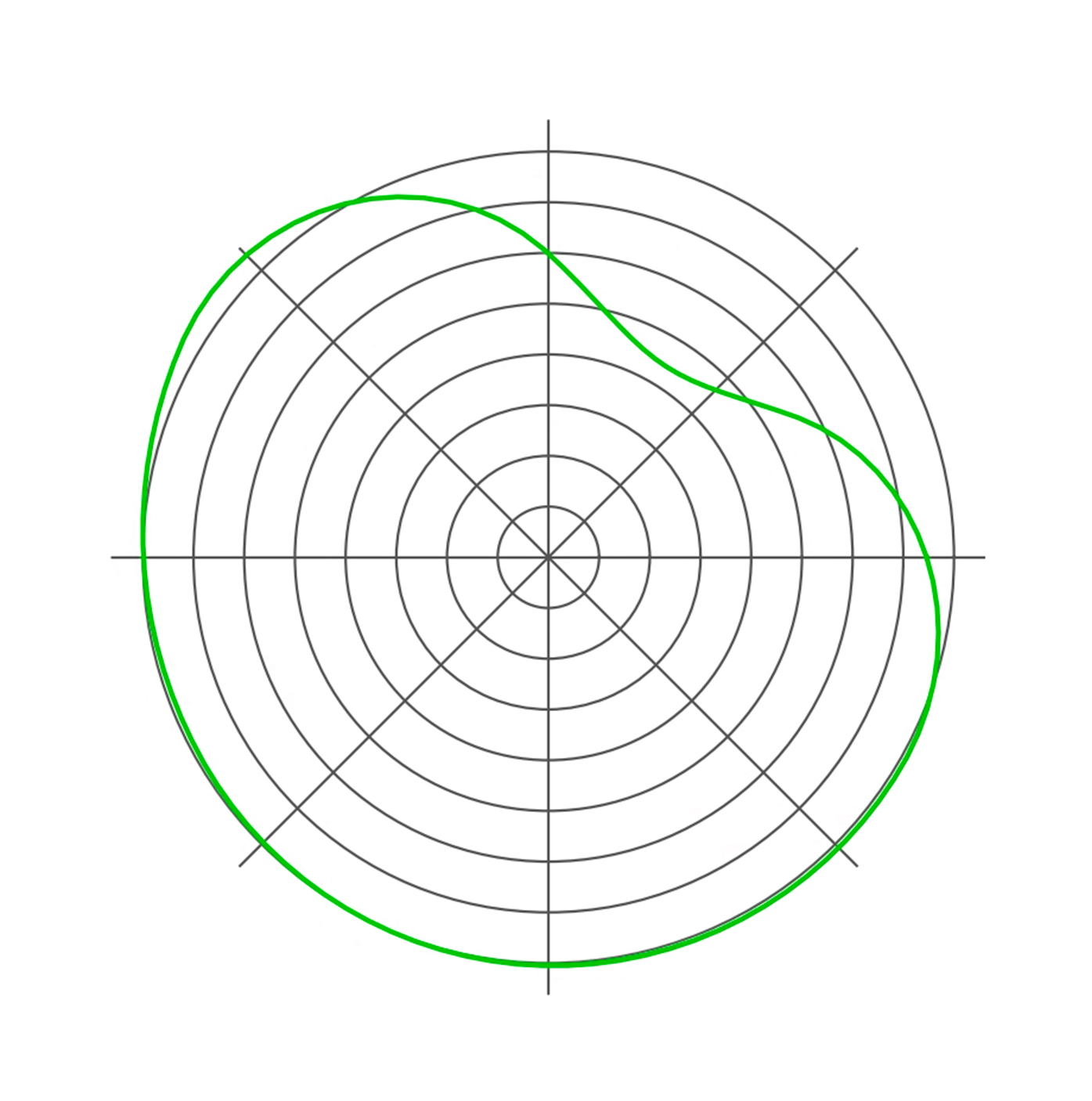

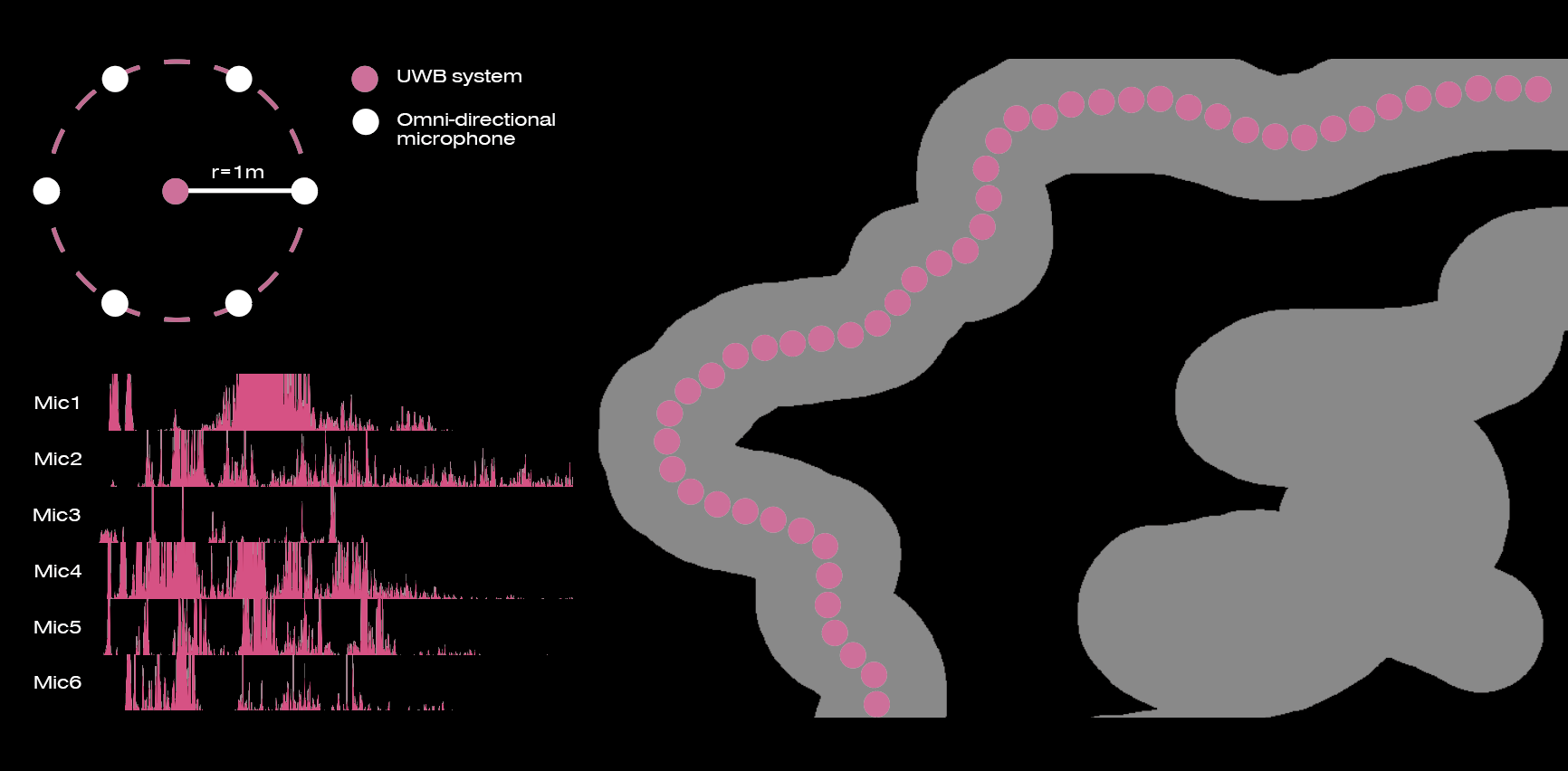

A UWB (ultra-wideband) positioning system locates the person in space, yielding a continuous movement trajectory.
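UWB positioning commonly works by measuring the distance between a worn tag and several fixed base stations (anchors) and then solving for the tag's position. The project does not say how its system computes position, and many UWB kits do this in firmware. The sketch below is a generic 2D least-squares trilateration, assuming known anchor coordinates in metres and one measured range per anchor; `trilaterate` is a hypothetical name.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def trilaterate(anchors: Sequence[Point], ranges: Sequence[float]) -> Point:
    """Least-squares 2D position from distances to at least 3 fixed anchors."""
    if len(anchors) < 3 or len(anchors) != len(ranges):
        raise ValueError("need at least 3 anchors with one range each")
    x0, y0 = anchors[0]
    r0 = ranges[0]
    # Subtracting anchor 0's circle equation from each other one gives linear rows
    #   2(xi-x0) x + 2(yi-y0) y = r0^2 - ri^2 + xi^2 - x0^2 + yi^2 - y0^2.
    # Accumulate the 2x2 normal equations (A^T A) p = A^T b for those rows.
    s_aa = s_ab = s_bb = s_ac = s_bc = 0.0
    for (xi, yi), ri in zip(anchors[1:], ranges[1:]):
        a = 2.0 * (xi - x0)
        b = 2.0 * (yi - y0)
        c = r0**2 - ri**2 + xi**2 - x0**2 + yi**2 - y0**2
        s_aa += a * a
        s_ab += a * b
        s_bb += b * b
        s_ac += a * c
        s_bc += b * c
    det = s_aa * s_bb - s_ab * s_ab
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is ambiguous")
    # Cramer's rule on the 2x2 system.
    x = (s_ac * s_bb - s_ab * s_bc) / det
    y = (s_aa * s_bc - s_ab * s_ac) / det
    return x, y
```

With four anchors at the corners of a 5 m x 5 m room, the extra anchor over the minimum of three lets the least-squares step average out some ranging noise.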

Base Station Coverage

Human coordinate record view

Coordinate data output in real time
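Turning the real-time coordinate output into a continuous trajectory usually involves some smoothing, since individual position fixes jitter. The sketch below assumes a simple exponential moving average; the smoothing method, the `smooth_path` name, and the `alpha` value are illustrative assumptions rather than the project's settings.

```python
from typing import Iterable, Iterator, Tuple

Point = Tuple[float, float]


def smooth_path(points: Iterable[Point], alpha: float = 0.3) -> Iterator[Point]:
    """Exponential moving average over raw position fixes.

    alpha in (0, 1]: higher values follow the wearer faster,
    lower values suppress jitter more.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    sx = sy = None
    for x, y in points:
        if sx is None:
            sx, sy = x, y  # the first fix initialises the filter
        else:
            # Move a fraction alpha of the way toward the new fix.
            sx += alpha * (x - sx)
            sy += alpha * (y - sy)
        yield sx, sy
```

Because it is a generator, it can sit directly on a live stream of fixes and emit one smoothed point per incoming point.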

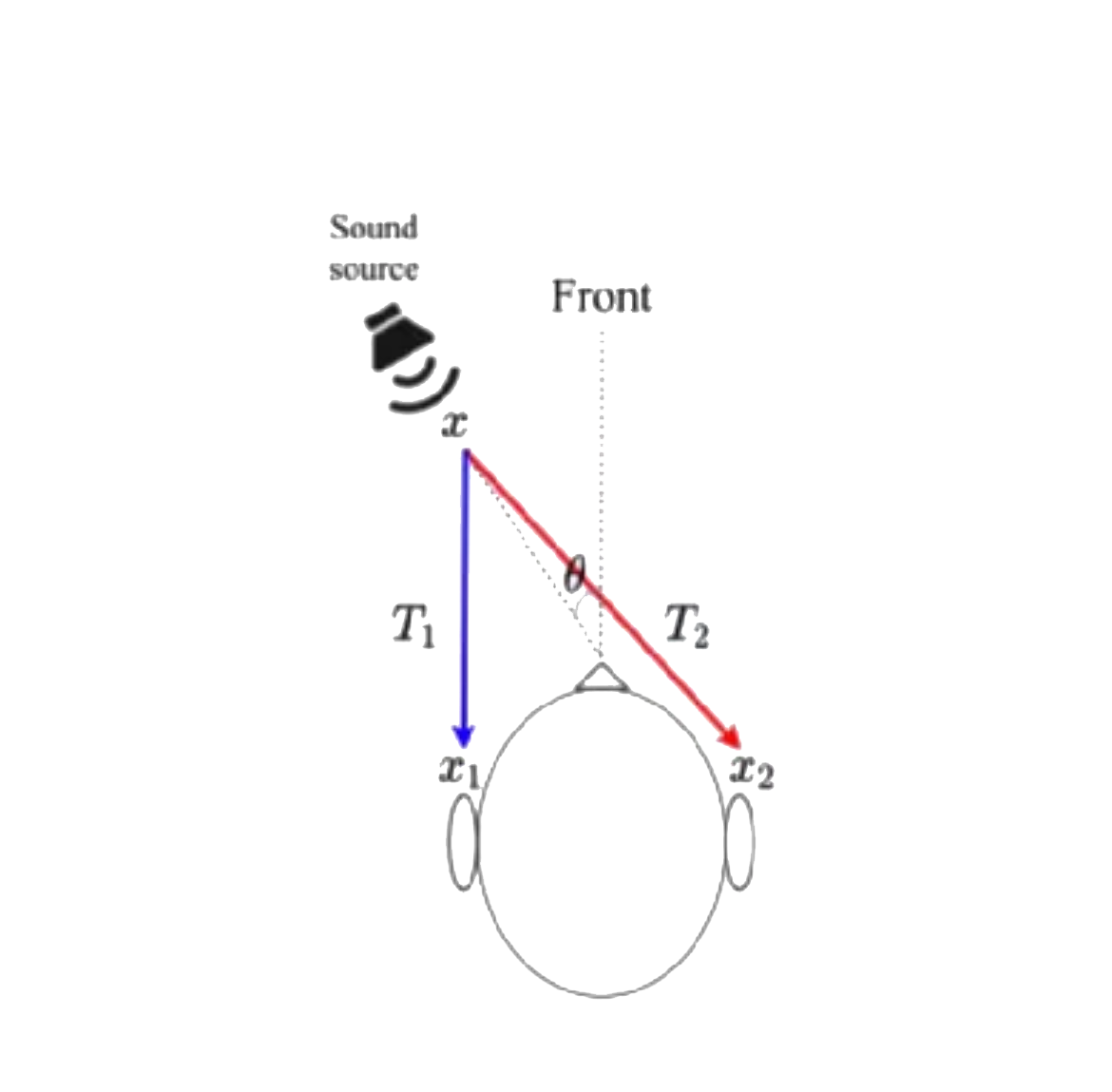

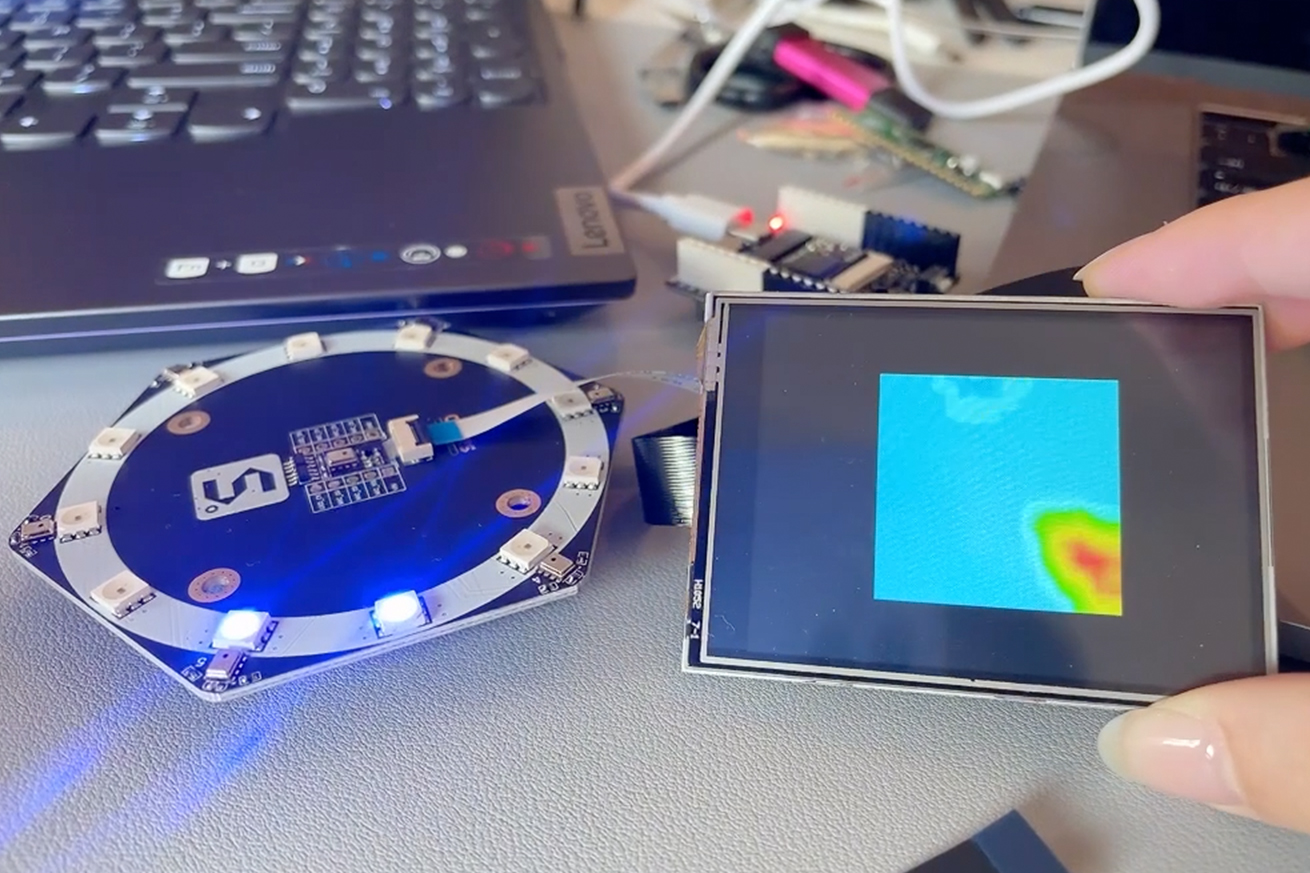

5. Sound Source Localization

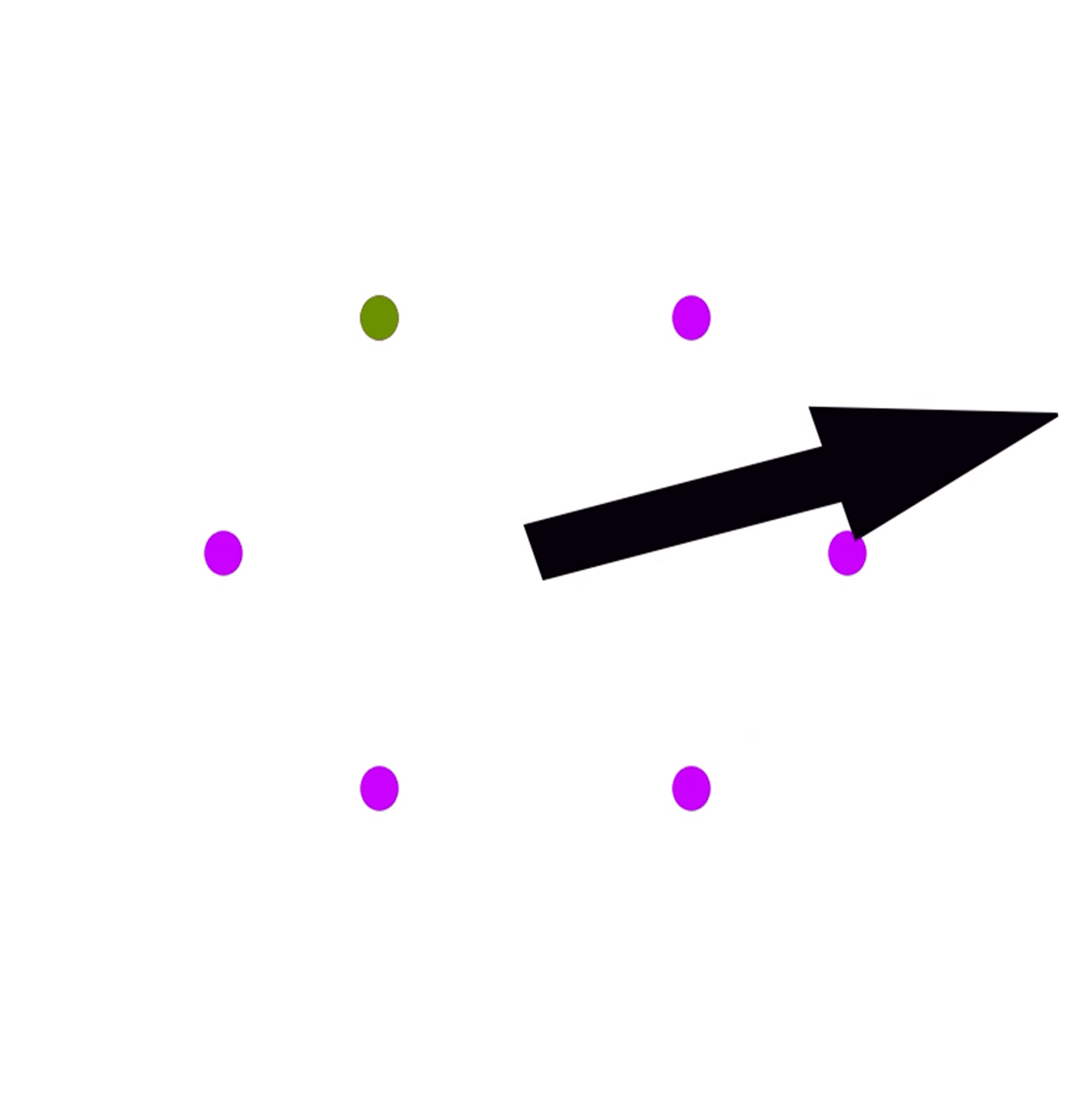

Multi-point sound localization in 2D

Binaural positioning principle diagram
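The binaural principle links the arrival-time difference Δt between two microphones spaced d apart to the source direction θ. For a distant source, the extra path to the farther microphone is d·sin θ, so θ = arcsin(c·Δt / d), where c is the speed of sound. The sketch below implements that far-field formula; the function name and sign convention are assumptions for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, in air at roughly 20 degrees C


def bearing_from_delay(delay_s: float, mic_spacing_m: float,
                       c: float = SPEED_OF_SOUND) -> float:
    """Far-field direction of arrival, in degrees, for one microphone pair.

    delay_s is (arrival time at mic B) - (arrival time at mic A).
    0 degrees is straight ahead of the pair; positive angles point toward mic A.
    """
    if mic_spacing_m <= 0:
        raise ValueError("mic_spacing_m must be positive")
    ratio = c * delay_s / mic_spacing_m
    # Measurement noise can push |ratio| slightly above 1; clamp before arcsin.
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))
```

A single pair cannot tell front from back (both give the same Δt), which is one reason to use more microphones arranged around the body.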

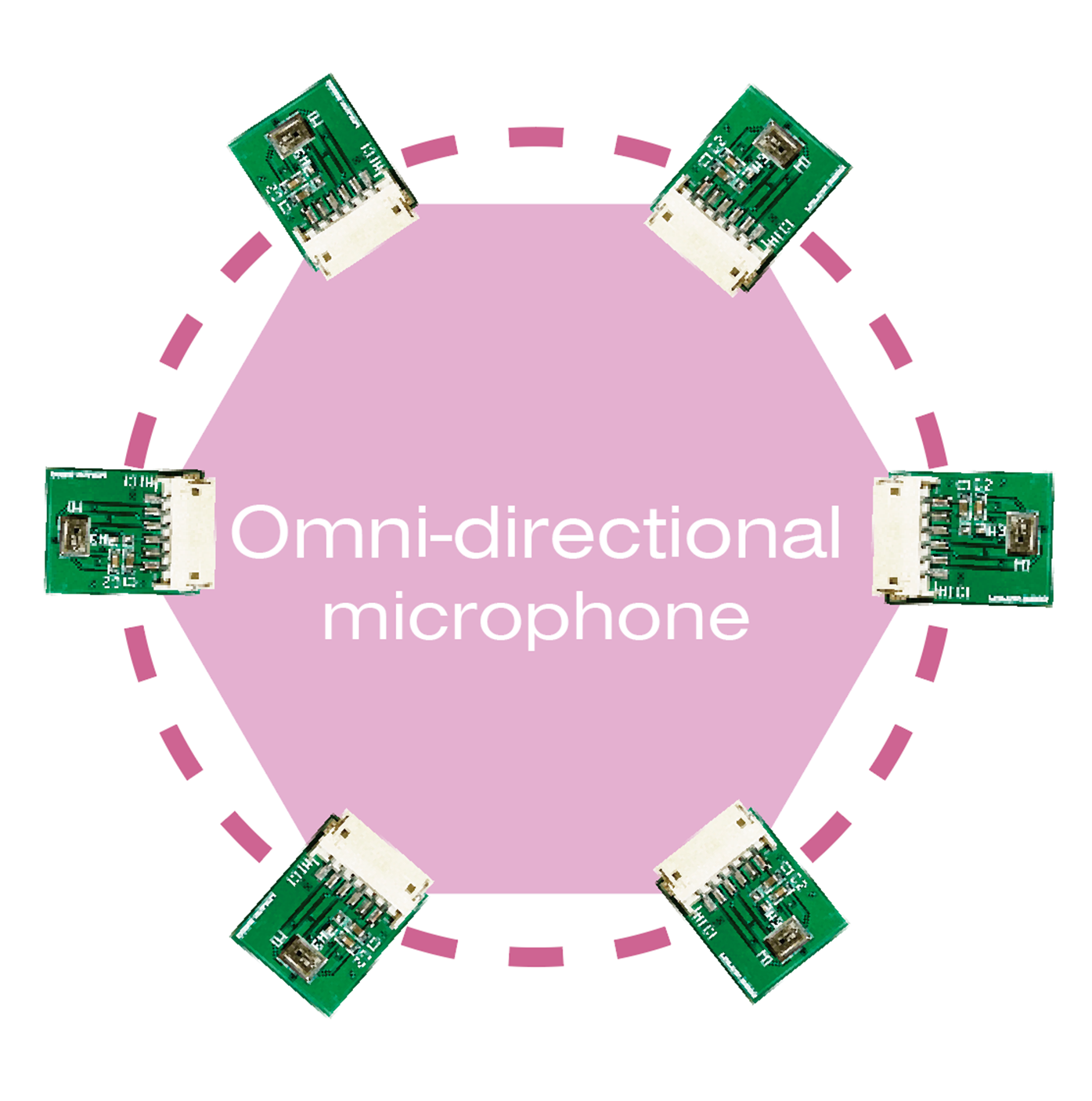

Omnidirectional microphones collect sound data from the surrounding space.
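The time difference used for localization has to be estimated from the recorded signals. A standard approach is to find the lag at which the cross-correlation of two microphone signals peaks. The project does not state its estimation method, and weighted variants such as GCC-PHAT are common in practice. The brute-force version below is a plain-Python sketch assuming two time-aligned sample lists from the same clock; `estimate_delay` is a hypothetical name.

```python
from typing import Sequence


def estimate_delay(a: Sequence[float], b: Sequence[float],
                   max_lag: int, sample_rate: float) -> float:
    """Delay of signal b relative to signal a, in seconds.

    A positive result means the sound reached microphone A first.
    """
    n = min(len(a), len(b))
    best_lag, best_score = 0, float("-inf")
    # Visit small lags first so ties (e.g. silence) resolve toward zero delay.
    for lag in sorted(range(-max_lag, max_lag + 1), key=abs):
        # Correlate a[i] with b[i + lag] over the overlapping samples only.
        score = 0.0
        for i in range(max(0, -lag), min(n, n - lag)):
            score += a[i] * b[i + lag]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / sample_rate
```

`max_lag` only needs to cover the largest physically possible delay, i.e. the microphone spacing divided by the speed of sound, times the sample rate.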

Sound Information Output

Sound Orientation

Sound Intensity

Sound Location
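Two of these outputs can be sketched directly. Intensity is computed below as an RMS level in dBFS. A source location is computed as the intersection of two bearings to the same source taken from two known points, for example two microphone pairs or two UWB-tracked positions of the wearer. This is an assumption about how such outputs could be derived, not the project's code; `level_dbfs` and `locate` are hypothetical names, and bearings are measured counter-clockwise from the +x axis.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def level_dbfs(samples: Sequence[float]) -> float:
    """RMS level in dB relative to full scale (samples normalised to [-1, 1])."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20.0 * math.log10(rms) if rms > 0 else float("-inf")


def locate(p1: Point, bearing1_deg: float,
           p2: Point, bearing2_deg: float) -> Optional[Point]:
    """Intersect two bearing lines; returns None if they are parallel."""
    u1 = (math.cos(math.radians(bearing1_deg)), math.sin(math.radians(bearing1_deg)))
    u2 = (math.cos(math.radians(bearing2_deg)), math.sin(math.radians(bearing2_deg)))
    # Solve p1 + t1*u1 = p2 + t2*u2 for t1 using 2D cross products.
    denom = u1[0] * u2[1] - u1[1] * u2[0]
    if abs(denom) < 1e-9:
        return None
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (dx * u2[1] - dy * u2[0]) / denom
    # A negative t1 would place the source behind observer 1.
    return (p1[0] + t1 * u1[0], p1[1] + t1 * u1[1])
```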

Part of the code to implement multi-point sound source localization

Schematic Diagram

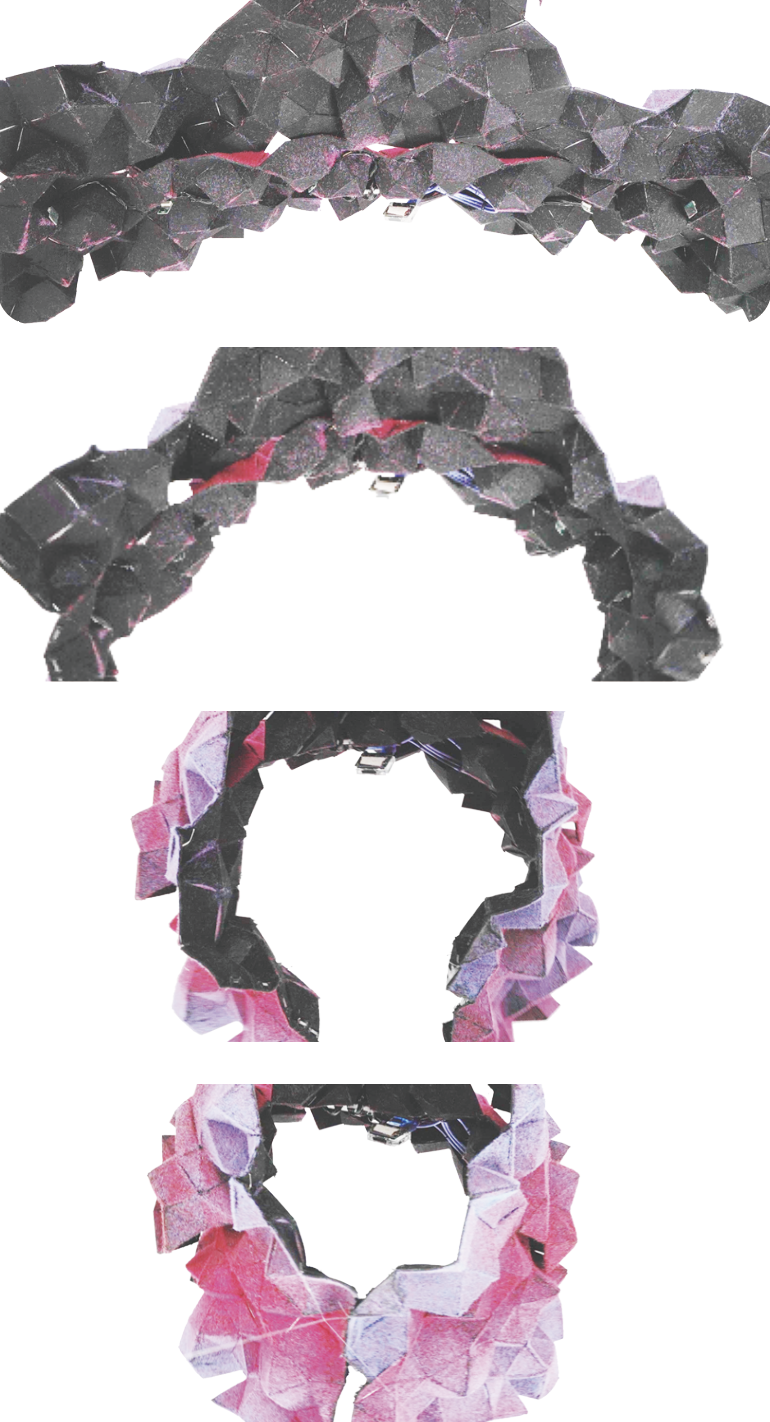

6. Wearable Device Design

Integrating the sound source localization system and the UWB localization system into a single garment

In 2D space, the most favorable layout for a sound-pickup microphone array is a perfect circle, so the garment structure is designed based on Archimedes polygons.

Archimedes polygons -------> layout plan
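One reason a circle suits a 2D array is rotational symmetry: every direction sees the same array geometry, so no azimuth is favored. The sketch below places a hypothetical number of microphones evenly on a circle and computes the relative arrival time at each one for a far-field source, the pattern a localization algorithm compares measurements against. The microphone count, radius, and function names are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def circular_array(n_mics: int, radius_m: float) -> List[Point]:
    """Evenly spaced microphone positions on a circle centred on the wearer."""
    return [
        (radius_m * math.cos(2 * math.pi * k / n_mics),
         radius_m * math.sin(2 * math.pi * k / n_mics))
        for k in range(n_mics)
    ]


def arrival_delays(mics: Sequence[Point], azimuth_deg: float,
                   c: float = 343.0) -> List[float]:
    """Arrival time (s) at each mic, relative to the array centre, for a
    far-field plane wave coming from azimuth_deg.

    Negative values mean the mic hears the wave before the centre does.
    """
    ux = math.cos(math.radians(azimuth_deg))
    uy = math.sin(math.radians(azimuth_deg))
    # A mic displaced toward the source by (p . u) metres hears it (p . u)/c earlier.
    return [-(x * ux + y * uy) / c for x, y in mics]
```

Rotating the source by 360/n degrees simply rotates the delay pattern by one microphone, which is the symmetry the circular layout provides.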

Modular Design and 3D Tailoring

The garment is produced using three-dimensional cutting and a flocking process.

When the wearer puts the garment on, the omnidirectional microphones embedded in it are arranged in a perfect circle, forming the optimal microphone array.

Garment as worn on the body

Wearable Device Display

Garment front plan

Back view of garment with electronic equipment layout

7. Sound Field Reproduction and Information Visualization

Explanation of Cross-space Communication Instruction
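The project renders the sound field in TouchDesigner, and the exact mapping is not described. As a language-neutral sketch of one way to turn localized sources into a 2D field image, the code below assumes each source is given as a position plus its level at 1 m. It applies free-field spreading (the level falls by 20·log10 of the distance), sums sources as incoherent power on a grid, and maps the result to 0..1 brightness. All names, the 0.1 m distance clamp, and the 30 to 90 dB display range are hypothetical choices.

```python
import math
from typing import List, Sequence, Tuple

Source = Tuple[float, float, float]  # (x m, y m, level in dB at 1 m)


def field_grid(sources: Sequence[Source], width_m: float, depth_m: float,
               cell_m: float) -> List[List[float]]:
    """Estimated level (dB) at each grid-cell centre."""
    cols = int(round(width_m / cell_m))
    rows = int(round(depth_m / cell_m))
    grid = []
    for r in range(rows):
        y = (r + 0.5) * cell_m
        row = []
        for c in range(cols):
            x = (c + 0.5) * cell_m
            power = 0.0
            for sx, sy, level_1m in sources:
                # Clamp the distance so cells on top of a source stay finite.
                d = max(math.hypot(x - sx, y - sy), 0.1)
                # Free-field spreading relative to 1 m, then sum as power.
                power += 10 ** ((level_1m - 20 * math.log10(d)) / 10)
            row.append(10 * math.log10(power) if power > 0 else float("-inf"))
        grid.append(row)
    return grid


def to_brightness(grid: List[List[float]], floor_db: float = 30.0,
                  ceil_db: float = 90.0) -> List[List[float]]:
    """Map dB values linearly onto 0..1, clipping outside the display range."""
    span = ceil_db - floor_db
    return [[min(1.0, max(0.0, (v - floor_db) / span)) for v in row] for row in grid]
```

Summing power rather than pressure ignores interference between sources, which keeps the image stable and is a reasonable simplification for a visualization.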
